Re: the Social Science section of Holden Karnofsky’s Most Important Century

This post, in a nutshell:

Why I’m Focusing on Most Important Century’s Social Science Section:

The EA Community doesn’t make adequate use of knowledge from the social sciences (and neither does anyone else)

If EA had more familiarity with social science infrastructure, some good things could happen

The Points I’ll Make:

1) Karnofsky says “the fundamental reason social science is so hard to learn from” is that “it’s too hard to run true experiments and make clean comparisons”

➜ I’ll say “the fundamental reason” is closer to “lack of immediate financial incentives”

➜ And I’ll note additional structural issues

2) Karnofsky talks about “retirement” a la Age of Em for digital people, and also believes “sufficiently detailed and accurate simulations of humans would be conscious, to the same degree and for the same reasons that humans are conscious”

➜ I’ll say: Based on a quick read of Age of Em’s description of “retirement”, it seems unlikely such a “retirement” would be a satisfactory outcome for many ‘detailed & accurate’ simulations of humans

3) Karnofsky discusses how digital copying would enable a person to have “bonanzas of reflection and discussion” with themselves.

➜ I’ll say: I think this is a really interesting idea, but its application will be limited unless we get better at changing our behavior via abstract conversation (e.g., I can tell someone how to ride a bicycle, but that alone won’t make them able to ride one). Consequently, for most of life’s “Big Questions”, gaining abstract knowledge from a digital copy may be no more helpful than advice from another real-life person.

Intro/Context

This post was written in response to Holden Karnofsky’s Most Important Century blog post series. In the series, he argues that “the 21st century could be the most important century ever for humanity, via the development of advanced AI systems.” The series is great, and I agree with his overarching point that universe-altering AI is on the way (or is likely enough that we need to prepare for it). The points I make below respond to the “Social Sciences” section of his series. These points felt important to make because 1) Karnofsky’s view of the social sciences seems widespread in EA, 2) this view is diminishing the benefits we can gain from the social sciences, and 3) if EA engaged more fully with social science research, there would be great benefits to both sides.

Section I — Re: The Fundamental Reason Social Science is so hard to learn from

In making his argument for why social science is so hard to learn from, Karnofsky specifically uses the example of meditation. He writes:

Today, if we want to know whether meditation is helpful to people:

We can compare people who meditate to people who don't, but there will be lots of differences between those people, and we can't isolate the effect of meditation itself. (Researchers try to do so with various statistical techniques, but these raise their own issues.)

We could also try to run an experiment in which people are randomly assigned to meditate or not. But we need a lot of people to participate, all at the same time and under the same conditions, in the hopes that the differences between meditators and non-meditators will statistically "wash out" and we can pick up the effects of meditation. Today, these kinds of experiments - known as "randomized controlled trials" - are expensive, logistically challenging, time-consuming, and almost always end up with ambiguous and difficult-to-interpret results.

The first point I’ll make here might initially seem irrelevant to Karnofsky’s overall argument and risks seeming snarky, but I swear it’s leading somewhere. Here’s the point: The two bullets above imply that relatively little helpful or high-quality research has been done on meditation. However, there’s been an enormous amount of research done on meditation, including RCTs (randomized controlled trials). This meta-analysis, for example, found 1389 mindfulness-based RCTs published between 2010 and 2019, and mindfulness is only a single form of meditation. In fact, there’s now an entire field known as Contemplative Science, and meditation is one of the field’s most-researched practices. As research areas go, this one is exploding. Furthermore, the outcomes in many of the meditation-related studies are in no way ambiguous or difficult to interpret. Here’s a link to a page of important papers in the field. And, if you’re looking for an introductory book, Altered Traits: Science Reveals How Meditation Changes Your Mind, Brain, and Body by UW Madison Professor Richard Davidson & NYT Bestselling author Daniel Goleman is a great place to start.

I mention all this because the issue is not that social sciences are, as Karnofsky’s post states, “hard to learn from”. Rather: what has been learned from the social sciences is hard to disseminate.

Effectively distributing knowledge so that it may be implemented is an enormous problem – and one that goes beyond the scope of this blog post. However, I mention it in order to make an additional important point. This point is: a key reason we’re not learning & using more from the social sciences is a lack of immediate financial incentive.

So, for example, in my previous post, I described a technique that has been studied in the social sciences for over twenty years. Its use would have enormous economic impacts — that is, if it were used more frequently. For instance, one application of the technique described in the previous post resulted in students attending more days of school and having better learning outcomes. However, the economic impact of the technique isn’t immediate, so it’s not something a politician can run on. Additionally, as something with time-delayed benefits, it falls into the “when it’s working, we don’t notice it” problem. A great number of findings from the social sciences are like this: Their economic impact is not immediate or straightforward to measure (e.g., “What’s the economic impact of happiness?”). Therefore, people aren’t exactly rushing to disseminate and implement the findings.

Now, someone might here say: But the planet has cumulatively spent billions funding the social sciences. However, that funding is billions less than what has gone into the areas Karnofsky cites as progressing more quickly, viz., our development of cheaper, faster computers & more realistic video games. Of course, it could be argued that spending on those products is sustainable (e.g., they have a market, etc.). However, the savings we could attain from social science findings could be of incredible economic benefit (e.g., as the byproduct of better education, workforce training, etc.). The issue, again, is that these benefits are not as immediate or obvious.

Someone here might also say: “Well, if the findings are so great, why isn’t anyone doing anything about it?” This is in part, again, an issue of resources: who is going to be paid to do that? It’s also an issue of job scope: Busy academic researchers have more pressing job priorities than figuring out how to disseminate their research. Doing so is also a different skill set. Video game companies have hundreds to thousands of video game marketing staff to get the word out about their products. Academics usually have a few overburdened graduate students.

In short: I completely agree that studying humans is tricky. However, so is building a digital human out of a computer – something that Karnofsky (and now I) thinks is likely enough to write an entire blog series about. If as much funding and thought were going into the former (studying humans), it would be successful even more quickly (I believe) than the latter.

Section II — Re: Retirement for Digital People

Karnofsky writes:

I believe sufficiently detailed and accurate simulations of humans would be conscious, to the same degree and for the same reasons that humans are conscious.

He also writes:

Digital people….could make copies of themselves (including sped-up, temporary copies) to explore how different choices, lifestyles and environments affected them. Comparing copies would be informative in a way that current social science rarely is.

What would happen to these temporary but conscious digital people? Later in the text, Karnofsky explains “temporary digital people could complete a task and then retire to a nice virtual life, while running very slowly (and cheaply).” For details on this “retirement”, Karnofsky’s post points the reader to the “retirement” section in Age of Em: Work, Love, and Life when Robots Rule the Earth.

Except the retirement described in Age of Em sounds far from utopian. First, it has to be paid for. From Age of Em:

“Because the cost of running an em is proportional to speed over a wide range, the cost of retiring an em to run indefinitely at a slow speed can be small relative to the value that an em produces just in a few days of work…. Note, however, that these calculations ignore the cost of moving to a new retirement location, and they depend on interest rates remaining high.”

Secondly, the quality of the retirement itself may not be that great. Again, from Age of Em, about the possible plight of those who have paid for a slower retirement:

“Slow em retirees share many features with people we see as “less alive,” including ghosts. Not only are they literally closer subjectively to dying because of civilization instability, their minds are also more inflexible and stuck in their ways. Compared with faster working ems, slower retirees have less awareness, wealth, status, and influence, and they are slower to respond to events, including via speaking words or coordinating with others…”

This last quotation brings up another important issue to explore: the issue of a digital person’s “storage” unit being destroyed (e.g., perhaps by a glitch or a tornado). What’s the moral consequence here? Or, to make it more personal: How much effort is going to have to be put into making sure that all 300,000 conscious copies of you aren’t destroyed by a glitch?

I think there are very possibly technological or philosophical work-arounds to the issue of creating conscious, digital creatures in the context of “retirement.” It just seems, well, tricky.

Section III: Re: Bonanzas of reflection and discussion

Karnofsky’s bullet at the end of the Social Sciences section reads:

Bonanzas of reflection and discussion. Digital people could make copies of themselves (including sped-up, temporary copies) to study and discuss particular philosophy questions, psychology questions, etc. in depth, and then summarize their findings to the original. By seeing how different copies with different expertises and life experiences formed different opinions, they could have much more thoughtful, informed answers than I do to questions like "What do I want in life?", "Why do I want it?", "How can I be a person I'm proud of being?", etc.

First, as with many of the ‘good outcome’ scenarios Karnofsky lists in his posts, this one sounds great to me.

I have one small response here, which I make less because I want to nitpick a point that Karnofsky seems to be making in a generative tone rather than a conclusive one, and more because it contains an assumption I notice frequently in EA discussions. Namely: that because we know something, we can/will act on it. I think this may be in part because EAs – in many areas of our lives at least – do tend to act on what we know.

At the same time, we of course know that people don’t/can’t always act on abstract knowledge. For example, we know:

Giving someone a detailed explanation of how to ride a bike is not the best way for them to learn how to ride a bike.

People may believe/know something is “wrong” – and then do it anyway.

Sometimes we hear people say things – but do not fully understand their perspective.

I think this last example is the most relevant to Karnofsky’s “bonanza” scenario. If a digital copy became too different from you, you might not be able to fully grok the lessons that it is bringing to you. It might be no different than a good friend telling you the same information.

That’s not a reason to not do this. Instead, it’s just an indicator that to optimize a bonanza session, we’d need to improve our understanding of how to communicate & educate effectively. It’s also maybe something to weigh in the context where digital copies are an option – what are the ‘alternate paths’ it would make the most sense for them to take? When would it not be worth it (e.g., especially given the issues that come with creating another conscious being)?

Conclusion

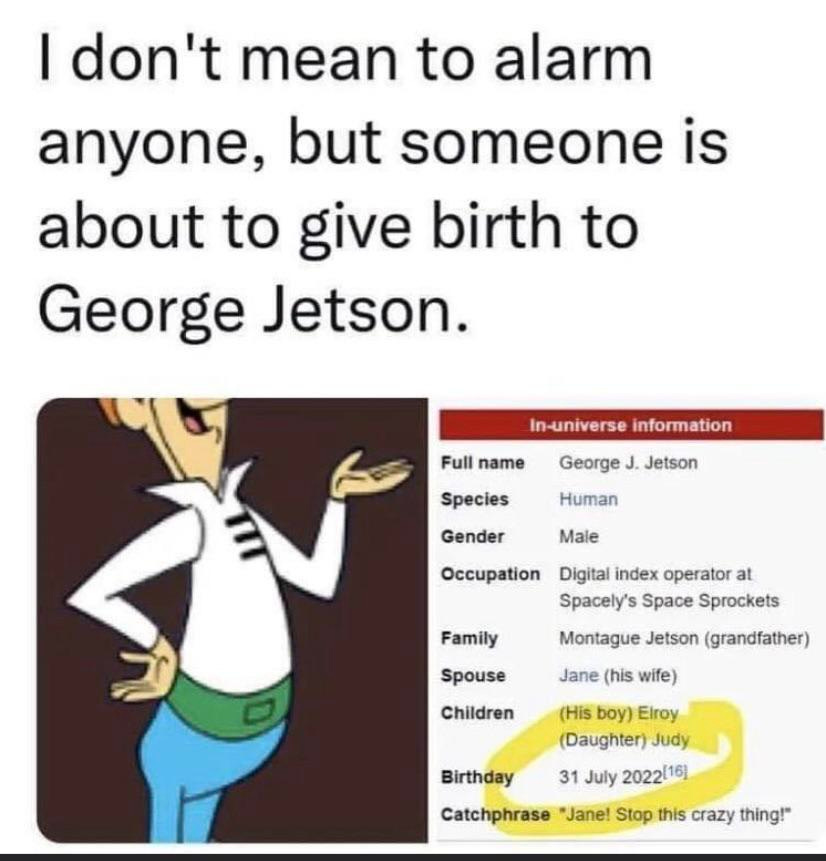

I would like to conclude by saying that, appropriately, today is allegedly George Jetson’s date of birth. HAPPY DATE OF BIRTH GEORGE JETSON*!